Good day, everyone

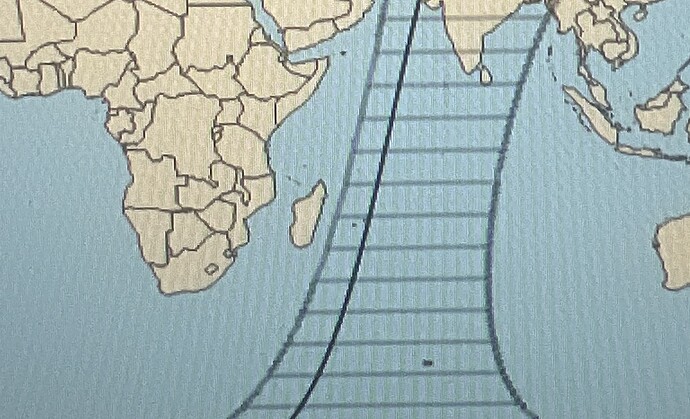

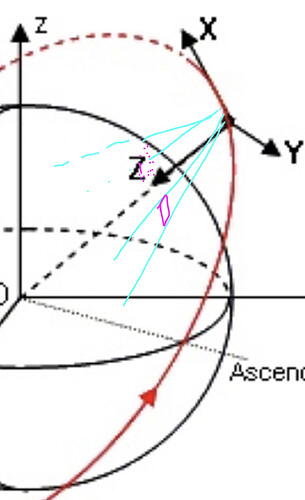

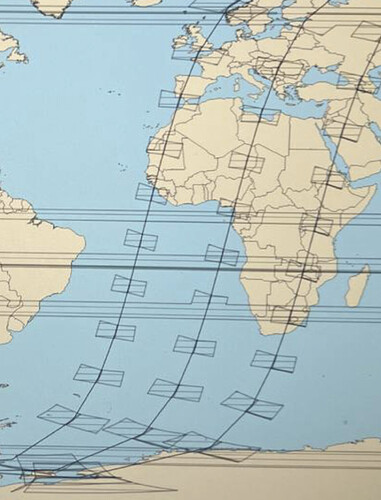

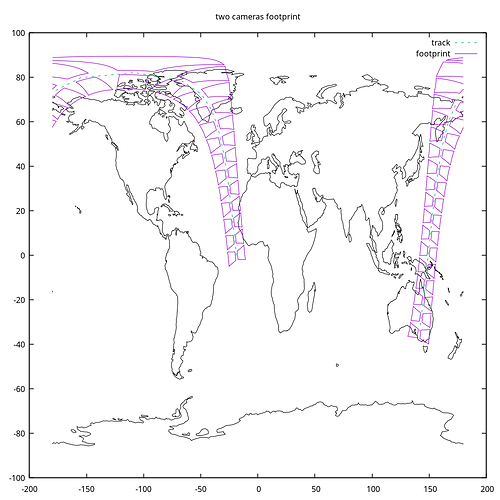

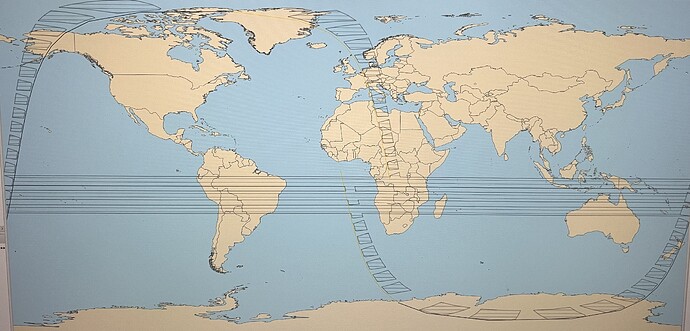

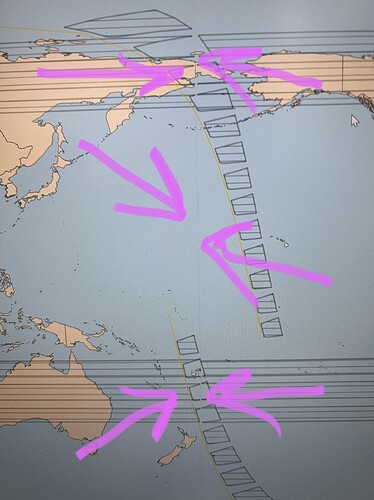

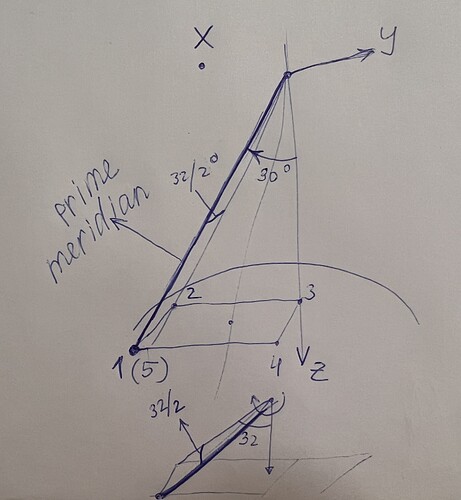

I need to display the ground footprints of 2 cameras imaging the Earth. Each camera can be rotated by fixed pitch, roll and yaw angles.

I build a single camera with the code below. I would like to rotate the camera's pointing direction, but what I actually rotate is the frame. What ways of rotating the camera are possible here, and which are more accurate and convenient?

My code is below. When I run it, the projected footprint of my camera shifts a little, but I don't know how to assess whether it has been rotated correctly.

TopocentricFrame topocentricFrame = new TopocentricFrame(earth, gp_State_Spacacraft, "Camera_1");

var Rotor = new Rotation(RotationOrder.XYZ, RotationConvention.VECTOR_OPERATOR, FastMath.PI / 6., FastMath.PI / 6., FastMath.PI / 6.);

Frame myFovFrameRotor = new Frame(topocentricFrame, new Transform(AbsoluteDate.ARBITRARY_EPOCH, Rotor), "myFovFrame");

PolygonalFieldOfView fov = new PolygonalFieldOfView(Vector3D.MINUS_K, DefiningConeType.INSIDE_CONE_TOUCHING_POLYGON_AT_EDGES_MIDDLE, Vector3D.MINUS_I, FastMath.toRadians(angleFOV), 4, 0.0);

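// Transform from the rotated camera frame to the Earth-fixed frame:
Transform topoToBody = myFovFrameRotor.getTransformTo(itrfFrame_for_earth, currentState.getDate());

// Unrotated variant, for comparison (use instead of the line above, not together with it):
// Transform topoToBody = topocentricFrame.getTransformTo(itrfFrame_for_earth, currentState.getDate());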

List<List<GeodeticPoint>> resu = fov.getFootprint(topoToBody, earth, FastMath.toRadians(10));
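
One possible way to sanity-check the rotation, sketched in plain Java without Orekit: the unrotated boresight is `Vector3D.MINUS_K` (nadir in the topocentric frame), so after elementary rotations about X, Y and Z by angles a, b, c the angle between the boresight and nadir should be acos(cos a · cos b), since the Z rotation leaves the vertical component untouched. For a = b = π/6 that is about 41.4°. The class and method names below (`BoresightCheck`, `offNadirAfterXYZ`) are made up for illustration, and the composition order (X first, then Y, then Z about fixed axes) is an assumption that may not match Hipparchus's internal convention; as far as I can tell the off-nadir angle comes out the same for the other usual X/Y/Z composition and for the inverse rotation.

```java
public class BoresightCheck {

    /** Rotate v about the X axis by angle a (radians, right-handed). */
    static double[] rotX(double[] v, double a) {
        double c = Math.cos(a), s = Math.sin(a);
        return new double[] { v[0], c * v[1] - s * v[2], s * v[1] + c * v[2] };
    }

    /** Rotate v about the Y axis by angle a (radians, right-handed). */
    static double[] rotY(double[] v, double a) {
        double c = Math.cos(a), s = Math.sin(a);
        return new double[] { c * v[0] + s * v[2], v[1], -s * v[0] + c * v[2] };
    }

    /** Rotate v about the Z axis by angle a (radians, right-handed). */
    static double[] rotZ(double[] v, double a) {
        double c = Math.cos(a), s = Math.sin(a);
        return new double[] { c * v[0] - s * v[1], s * v[0] + c * v[1], v[2] };
    }

    /** Angle in degrees between the unit vector v and nadir (0, 0, -1). */
    static double offNadirDeg(double[] v) {
        double dot = -v[2]; // dot product with (0, 0, -1)
        return Math.toDegrees(Math.acos(Math.max(-1.0, Math.min(1.0, dot))));
    }

    /** Off-nadir angle of the boresight after rotating nadir about X, then Y, then Z. */
    static double offNadirAfterXYZ(double a, double b, double c) {
        double[] nadir = { 0.0, 0.0, -1.0 };
        return offNadirDeg(rotZ(rotY(rotX(nadir, a), b), c));
    }

    public static void main(String[] args) {
        double a = Math.PI / 6;
        double rotated = offNadirAfterXYZ(a, a, a);
        double closedForm = Math.toDegrees(Math.acos(Math.cos(a) * Math.cos(a)));
        System.out.println("off-nadir angle from matrices [deg]:    " + rotated);
        System.out.println("off-nadir angle from closed form [deg]: " + closedForm);
    }
}
```

The same angle can then be measured on the Orekit side, for example between the nadir direction and the direction from the spacecraft to the middle of the computed footprint; if the two roughly agree, the frame rotation is at least tilting the camera by the expected amount.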