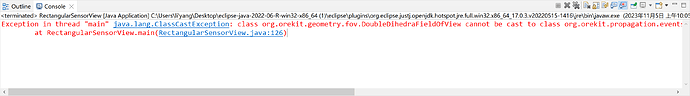

I tried the approach that was suggested ("Stick to the fov attached to your satellite and use a FieldOfViewDetector (see documentation) combined with an ElevationDetector (see documentation)"). I tried to combine `ElevationDetector` and `FieldOfViewDetector` using `BooleanDetector.andCombine(java.util.Collection)`, but I ran into problems because I don't know how to call the relevant methods. Could you provide concrete code, ideally as a modification of my code below?
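The sign conventions are what make such a combination work. As far as I understand the Orekit documentation, `ElevationDetector`'s g function is positive when the satellite is above the elevation mask, `FieldOfViewDetector`'s g function is *negative* when the target is inside the field of view, `BooleanDetector.andCombine` yields a g function equal to the minimum of its inputs (positive only when all are positive), and `BooleanDetector.notCombine` negates one. The plain-Java sketch below has no Orekit dependency (`GSignDemo`, `and` and `not` are hypothetical names) and reproduces only that arithmetic, to show why the FOV detector has to be negated before the AND.

```java
/**
 * Plain-Java sketch (no Orekit needed) of how AND / NOT combinations of event
 * g functions behave. Assumed convention, as in Orekit's BooleanDetector:
 * AND takes the minimum of the g values, NOT negates a g value.
 * GSignDemo and its methods are hypothetical names used only for illustration.
 */
public final class GSignDemo {

    /** Logical AND of g values: the result is positive only if every input is positive. */
    static double and(final double... g) {
        double result = Double.POSITIVE_INFINITY;
        for (final double gi : g) {
            result = Math.min(result, gi);
        }
        return result;
    }

    /** Logical NOT of a g value: swaps the "true" (positive) and "false" (negative) sides. */
    static double not(final double g) {
        return -g;
    }

    public static void main(final String[] args) {
        // Elevation margin (rad): positive when the satellite is above the 5 deg mask
        final double gElevation = Math.toRadians(12.0) - Math.toRadians(5.0);
        // FOV offset (rad): NEGATIVE when the station is inside the FOV
        // (sign convention assumed for FieldOfViewDetector)
        final double gFov = -0.2;

        // Raw FOV g value: the AND is negative although both conditions hold
        System.out.println("and(elevation, fov)      = " + and(gElevation, gFov));
        // Negated FOV g value: the AND is positive, as intended
        System.out.println("and(elevation, not(fov)) = " + and(gElevation, not(gFov)));
    }
}
```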

Thank you, best wishes!

Below is my source code:

// Initial state definition: date, orbit
final AbsoluteDate initialDate = new AbsoluteDate(2023, 9, 15, 4, 0, 0.0, TimeScalesFactory.getUTC());
final double mu = 3.986004415e+14; // gravitation coefficient (m^3/s^2)
final Frame inertialFrame = FramesFactory.getEME2000(); // inertial frame for orbit definition
final Vector3D position = new Vector3D(2797914.567, -2288195.171, 6012468.374);
final Vector3D velocity = new Vector3D(-6089.132, 2403.774, 3732.121);
final PVCoordinates pvCoordinates = new PVCoordinates(position, velocity);
final Orbit initialOrbit = new KeplerianOrbit(pvCoordinates, inertialFrame, initialDate, mu);

// Earth and frame
final Frame earthFrame = FramesFactory.getITRF(IERSConventions.IERS_2010, true);
final BodyShape earth = new OneAxisEllipsoid(Constants.WGS84_EARTH_EQUATORIAL_RADIUS,
        Constants.WGS84_EARTH_FLATTENING, earthFrame);

// Station (Kashgar)
final double longitude = FastMath.toRadians(75.9797);
final double latitude = FastMath.toRadians(39.4547);
final double altitude = 0.;
final GeodeticPoint station1 = new GeodeticPoint(latitude, longitude, altitude);
final TopocentricFrame sta1Frame = new TopocentricFrame(earth, station1, "喀什"); // "喀什" = Kashgar

// Defining rectangular field of view
double halfApertureAlongTrack = FastMath.toRadians(50);
double halfApertureAcrossTrack = FastMath.toRadians(50);
FieldOfView fov = new DoubleDihedraFieldOfView(Vector3D.MINUS_I,                         // from satellite to body center
                                               Vector3D.PLUS_K, halfApertureAcrossTrack, // across track direction
                                               Vector3D.PLUS_J, halfApertureAlongTrack,  // along track direction
                                               0);                                       // angular margin
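To see what the `DoubleDihedraFieldOfView` arguments mean geometrically, here is a plain-Java sketch of my reading of the rectangular FOV: each dihedron is obtained by rotating the center direction by ± its half aperture around its axis, and a direction is inside the FOV when it lies inside both dihedra. It assumes unit vectors in the spacecraft frame, each axis perpendicular to the center, and half apertures below 90°. It is not Orekit's implementation (which also handles the angular margin and returns a signed offset), and `DihedraFovSketch` and its methods are hypothetical names.

```java
/**
 * Plain-Java sketch of double-dihedra (rectangular) FOV geometry: a direction is
 * inside when, around each dihedral axis, its angle from the FOV center does not
 * exceed the half aperture. Assumes unit vectors in the spacecraft frame, each axis
 * perpendicular to the center, half apertures below pi/2. Names are hypothetical.
 */
public final class DihedraFovSketch {

    static double dot(final double[] a, final double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    static double[] cross(final double[] a, final double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    /** Absolute angle (rad) of direction u from the center, measured by rotation around axis. */
    static double angleAroundAxis(final double[] u, final double[] center, final double[] axis) {
        // (center, ortho, axis) is an orthonormal triad when axis is a unit vector perpendicular to center
        final double[] ortho = cross(axis, center);
        return Math.abs(Math.atan2(dot(u, ortho), dot(u, center)));
    }

    /** True if direction u lies inside both dihedra. */
    static boolean inside(final double[] u, final double[] center,
                          final double[] axis1, final double halfAperture1,
                          final double[] axis2, final double halfAperture2) {
        return angleAroundAxis(u, center, axis1) <= halfAperture1
            && angleAroundAxis(u, center, axis2) <= halfAperture2;
    }

    public static void main(final String[] args) {
        final double[] center = {-1, 0, 0}; // Vector3D.MINUS_I
        final double[] axis1 = {0, 0, 1};   // Vector3D.PLUS_K
        final double[] axis2 = {0, 1, 0};   // Vector3D.PLUS_J
        final double half = Math.toRadians(50);
        // Direction obtained by rotating the center by 60 deg around PLUS_K
        final double[] u = {-Math.cos(Math.toRadians(60)), Math.sin(Math.toRadians(60)), 0};
        System.out.println("center inside: " + inside(center, center, axis1, half, axis2, half));
        System.out.println("60 deg off-center inside: " + inside(u, center, axis1, half, axis2, half));
    }
}
```

If I read the geometry correctly, the aperture paired with an axis opens in the plane perpendicular to that axis, so the half aperture given with `PLUS_K` limits the spread toward ±J. Both half apertures are 50° here, so this does not change the result.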

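// Defining attitude provider
// QSW local orbital frame: X (Q) is along the position vector, so Vector3D.MINUS_I
// in the FOV definition points from the satellite to the body center
AttitudeProvider attitudeProvider = new LofOffset(inertialFrame, LOFType.QSW);
// Defining the propagator and setting up the attitude provider
Propagator propagator = new KeplerianPropagator(initialOrbit);
propagator.setAttitudeProvider(attitudeProvider);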
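For the `Vector3D.MINUS_I` center of the FOV to point at the Earth, the satellite X axis has to follow the radial direction. That is the case for Orekit's `LOFType.QSW` local orbital frame, whose axes are Q along the position vector, W along the orbital momentum r × v, and S = W × Q; with `LofOffset` and no extra rotation, the spacecraft axes coincide with these. The plain-Java sketch below (no Orekit dependency; `QswSketch` and its methods are hypothetical names) builds those axes from the initial position and velocity of the orbit above.

```java
/**
 * Plain-Java sketch of the QSW local orbital frame axes built from inertial
 * position r and velocity v: Q along r, W along r x v (orbital momentum), S = W x Q.
 * QswSketch and its methods are hypothetical names used for illustration.
 */
public final class QswSketch {

    static double[] cross(final double[] a, final double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    static double norm(final double[] a) {
        return Math.sqrt(a[0] * a[0] + a[1] * a[1] + a[2] * a[2]);
    }

    static double[] scale(final double[] a, final double k) {
        return new double[] {k * a[0], k * a[1], k * a[2]};
    }

    /** Returns the {Q, S, W} unit vectors, expressed in the frame of r and v. */
    static double[][] qswAxes(final double[] r, final double[] v) {
        final double[] q = scale(r, 1.0 / norm(r));
        final double[] h = cross(r, v);            // orbital momentum direction
        final double[] w = scale(h, 1.0 / norm(h));
        final double[] s = cross(w, q);            // along-track, completes the triad
        return new double[][] {q, s, w};
    }

    public static void main(final String[] args) {
        // Initial position (m) and velocity (m/s) in EME2000, from the code above
        final double[] r = {2797914.567, -2288195.171, 6012468.374};
        final double[] v = {-6089.132, 2403.774, 3732.121};
        final double[][] axes = qswAxes(r, v);
        // Spacecraft -X axis in EME2000 when the attitude follows QSW:
        // the unit vector from the satellite toward the Earth center
        final double[] minusI = scale(axes[0], -1.0);
        System.out.printf("MINUS_I in EME2000: [%.6f, %.6f, %.6f]%n", minusI[0], minusI[1], minusI[2]);
    }
}
```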

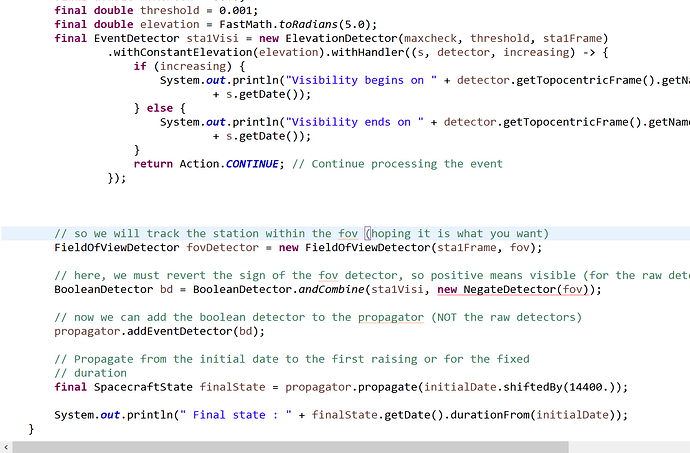

// Event definition
final double maxcheck = 60.0;   // maximum checking interval (s)
final double threshold = 0.001; // convergence threshold (s)
final double elevation = FastMath.toRadians(5.0);
final EventDetector sta1Visi = new ElevationDetector(maxcheck, threshold, sta1Frame)
        .withConstantElevation(elevation)
        .withHandler((s, detector, increasing) -> {
            if (increasing) {
                System.out.println("Visibility begins on " + detector.getTopocentricFrame().getName() +
                                   " at " + s.getDate());
            } else {
                System.out.println("Visibility ends on " + detector.getTopocentricFrame().getName() +
                                   " at " + s.getDate());
            }
            return Action.CONTINUE; // Continue processing the event
        });
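What the `ElevationDetector` above monitors can be written out directly: the elevation of the satellite seen from the station is the angle between the station-to-satellite vector and the local horizontal plane, i.e. the arcsine of its component along the geodetic zenith, and with `withConstantElevation` the g function is that elevation minus the 5° mask. The plain-Java sketch below assumes both positions are Earth-fixed Cartesian coordinates in meters on the WGS84 ellipsoid; `ElevationSketch` and its methods are hypothetical names, and this is not Orekit's code.

```java
/**
 * Plain-Java sketch of the elevation of an Earth-fixed target seen from a geodetic
 * point on the WGS84 ellipsoid, and of a constant-mask elevation g function.
 * Assumes Earth-fixed Cartesian coordinates in meters. Names are hypothetical.
 */
public final class ElevationSketch {

    static final double A = 6378137.0;           // WGS84 equatorial radius (m)
    static final double F = 1.0 / 298.257223563; // WGS84 flattening

    /** Earth-fixed Cartesian position (m) of a geodetic point (lat, lon in rad, h in m). */
    static double[] geodeticToEcef(final double lat, final double lon, final double h) {
        final double e2 = F * (2.0 - F);                              // first eccentricity squared
        final double sinLat = Math.sin(lat);
        final double n = A / Math.sqrt(1.0 - e2 * sinLat * sinLat);   // prime vertical radius
        return new double[] {
            (n + h) * Math.cos(lat) * Math.cos(lon),
            (n + h) * Math.cos(lat) * Math.sin(lon),
            (n * (1.0 - e2) + h) * sinLat
        };
    }

    /** Elevation (rad) of an Earth-fixed target seen from the geodetic point. */
    static double elevation(final double lat, final double lon, final double h, final double[] target) {
        final double[] station = geodeticToEcef(lat, lon, h);
        // Geodetic zenith: unit normal to the ellipsoid at the station
        final double[] zenith = {Math.cos(lat) * Math.cos(lon), Math.cos(lat) * Math.sin(lon), Math.sin(lat)};
        final double dx = target[0] - station[0];
        final double dy = target[1] - station[1];
        final double dz = target[2] - station[2];
        final double range = Math.sqrt(dx * dx + dy * dy + dz * dz);
        final double sinEl = (dx * zenith[0] + dy * zenith[1] + dz * zenith[2]) / range;
        return Math.asin(Math.max(-1.0, Math.min(1.0, sinEl))); // clamp rounding overshoot
    }

    /** Constant-mask elevation g function: positive when the target is above the mask. */
    static double g(final double lat, final double lon, final double h,
                    final double[] target, final double minElevation) {
        return elevation(lat, lon, h, target) - minElevation;
    }

    public static void main(final String[] args) {
        final double lat = Math.toRadians(39.4547);
        final double lon = Math.toRadians(75.9797);
        final double[] station = geodeticToEcef(lat, lon, 0.0);
        // Hypothetical target 500 km along the zenith of the station
        final double[] overhead = {
            station[0] + 500e3 * Math.cos(lat) * Math.cos(lon),
            station[1] + 500e3 * Math.cos(lat) * Math.sin(lon),
            station[2] + 500e3 * Math.sin(lat)
        };
        System.out.println("elevation (deg) = " + Math.toDegrees(elevation(lat, lon, 0.0, overhead)));
        System.out.println("g (rad)         = " + g(lat, lon, 0.0, overhead, Math.toRadians(5.0)));
    }
}
```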

// Add event to be detected
propagator.addEventDetector(sta1Visi);
// Propagate from the initial date for a fixed duration (14400 s = 4 h)
final SpacecraftState finalState = propagator.propagate(initialDate.shiftedBy(14400.));
System.out.println("Final state: " + finalState.getDate().durationFrom(initialDate));
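Following the quoted suggestion, one way to build the combined detector is sketched below. It assumes Orekit 11.x (the version that the `detector.getTopocentricFrame()` call in the handler above suggests), and `StationInFovDetectors` and `build` are hypothetical names. Two points matter. First, `FieldOfViewDetector`'s g function is negative when the target is inside the field of view, while `ElevationDetector`'s is positive above the mask, and `BooleanDetector.andCombine` is positive only when all sub-detector g functions are positive; so the FOV detector is wrapped in `BooleanDetector.notCombine` first. Second, the station's `TopocentricFrame` can be passed directly as the FOV target because it implements `PVCoordinatesProvider`.

```java
import java.util.Arrays;

import org.hipparchus.ode.events.Action;
import org.orekit.frames.TopocentricFrame;
import org.orekit.geometry.fov.FieldOfView;
import org.orekit.propagation.events.BooleanDetector;
import org.orekit.propagation.events.ElevationDetector;
import org.orekit.propagation.events.EventDetector;
import org.orekit.propagation.events.FieldOfViewDetector;

/**
 * Sketch (assumes Orekit 11.x): builds a detector whose g function is positive only
 * when the satellite is above the station elevation mask AND the station is inside
 * the satellite field of view. StationInFovDetectors and build are hypothetical names.
 */
public final class StationInFovDetectors {

    private StationInFovDetectors() {
        // utility class, no instances
    }

    public static EventDetector build(final TopocentricFrame station, final FieldOfView fov,
                                      final double minElevation,
                                      final double maxCheck, final double threshold) {

        // g > 0 when the satellite elevation seen from the station exceeds minElevation
        final EventDetector aboveMask =
                new ElevationDetector(maxCheck, threshold, station)
                        .withConstantElevation(minElevation);

        // FieldOfViewDetector: g < 0 when the target (the station) is inside the FOV,
        // so it is negated to get g > 0 inside the FOV
        final EventDetector inFov =
                BooleanDetector.notCombine(new FieldOfViewDetector(station, fov)
                        .withMaxCheck(maxCheck)
                        .withThreshold(threshold));

        // Collection form, as in the question; andCombine(aboveMask, inFov) is equivalent.
        // Only this handler is called: the sub-detectors' handlers are not used.
        return BooleanDetector.andCombine(Arrays.asList(aboveMask, inFov))
                .withHandler((s, detector, increasing) -> {
                    System.out.println("Station " + station.getName()
                            + (increasing ? " enters" : " leaves")
                            + " the above-mask-and-in-FOV window at " + s.getDate());
                    return Action.CONTINUE; // keep propagating to catch the next window
                });
    }
}
```

In the code above it would replace `sta1Visi`, e.g. `propagator.addEventDetector(StationInFovDetectors.build(sta1Frame, fov, elevation, maxcheck, threshold));`. The FOV test depends on the spacecraft attitude, so the attitude provider must be set on the propagator before propagating.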