Hello everyone!

I am currently working on geolocating pixels for the SPOT 6 dataset using viewing angles (across-track and along-track) for pixel and satellite metadata. The current method I’m following involves:

-

Interpolating Position and Quaternion: Read position, quaternion data from metadata file and interpolate for given timestamp using Lagrange interpolation for position and velocity, Cubic interpolation for quaternion values.

-

LOS Calculation: Computing the Line-of-Sight (LOS) vector in the satellite frame from the viewing angles. Rotating the LOS vector into the ECEF frame using the interpolated quaternion.

-

Calculating Intersection with WGS84 Ellipsoid: Using the satellite’s position and the LOS vector to compute the intersection point on the Earth’s surface. Converting the intersection point to latitude and longitude coordinates.

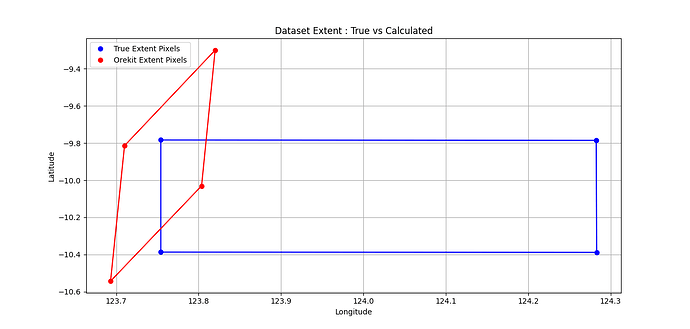

I want to carry out the above steps for the 4 extent pixels (Top-left, Top-Right, Bottom-Left, Bottom-right) to determine the image extent on ground. However, the resulting geolocated pixel coordinates (Latitude, Longitude) are skewed and do not align with the dataset extent provided in the SPOT metadata sheet.

DIM_SPOT6_P_201211100138085_SEN_816007101.XML (164.8 KB)

Has anyone worked with geolocating SPOT Metadata or encountered a similar issue? It would great if someone can provide insights on potential sources of the skewness error. Specifically, I’m wondering if:

- There are additional corrections (e.g., sensor alignment, time offset, post-processing) required for SPOT true extent data.

- The interpolation method is introducing errors.

- The LOS Computation method is incorrect or needs additional transforms

For additional clarity, I am making the following assumptions as mentioned in the SPOT Imagery User Guide :

- All satellite positions, velocities are expressed in Cartesian coordinates in the ECEF/ECF frame considering WGS84 geodetic system

- Quaternion defines the orientation of the sensor focal plane with respect to the ECEF Frame

- Sensor frame is assumed to be aligned with Satellite Body frame

- Pixel viewing angles are assumed to be constant with respect to satellite body frame and do not change over duration of image strip capture

Any guidance, suggestions, or references would be greatly appreciated. Thanks in advance!

# 1. Set up Earth model and frames(WGS84 ellipsoid)

itrf = FramesFactory.getITRF(IERSConventions.IERS_2010, True)

ecef_frame = FramesFactory.getITRF(IERSConventions.IERS_2010, True)

earth = OneAxisEllipsoid(

Constants.WGS84_EARTH_EQUATORIAL_RADIUS,

Constants.WGS84_EARTH_FLATTENING,

itrf

)

utc = TimeScalesFactory.getUTC()

date =AbsoluteDate(2012, 11, 10, 1, 38, 44.983439, utc) # Starting instance of Top Left, Top Right Pixel Capture

# 2. Define satellite position and velocity in ECEF (example values)

position_ecef = Vector3D(-3918478.3, 5767436.663, -1229643.147) # Example position in meters

# 3. Define pixel viewing angles in Satellite Frame

# Pixel Viewing angle - Top-Left Pixel

# theta_a1 = np.radians(-4.35258992715) # Across-track

# theta_l1 = np.radians(-1.78932242564) # Along-track

# Viewing angles (convert to radians) Top-Right Pixel

theta_a1 = np.radians(0.244516008204) # Across-track

theta_l1 = np.radians(-2.76372372096) # Along-track

# 4. Compute Pixel LOS vector in satellite frame

los_sat1 = np.array([

np.tan(theta_a1),

-np.tan(theta_l1),

1

])

los_body = Vector3D(float(los_sat1[0]), float(los_sat1[1]), float(los_sat1[2])) # Create Vector3D object

# 5. Input Quaternion

quaternion_w = float(quat[0]) # Get Interpolated Quaternion at timestamp

quaternion_x = float(quat[1])

quaternion_y = float(quat[2])

quaternion_z = float(quat[3])

q0,q1,q2,q3 =quaternion_w,quaternion_x,quaternion_y,quaternion_z

# Compute Rotation matrix derived from the quaternion to transform from Satellite to ECEF Frame

R = np.array([

[1 - 2 * (q2**2 + q3**2), 2 * (q1 * q2 - q0 * q3), 2 * (q1 * q3 + q0 * q2)],

[2 * (q1 * q2 + q0 * q3), 1 - 2 * (q1**2 + q3**2), 2 * (q2 * q3 - q0 * q1)],

[2 * (q1 * q3 - q0 * q2), 2 * (q2 * q3 + q0 * q1), 1 - 2 * (q1**2 + q2**2)]

])

# 6. Rotate LOS Vector from Satellite to ECEF Frame by multiplying with Rotation Matrix

vector = los_sat1

los_ecef = np.dot(R, vector)

los_ecef = Vector3D(float(los_ecef[0]), float(los_ecef[1]), float(los_ecef[2]))

# 7. Build the intersection line using satellite position and rotated LOS in ECEF

line = Line(position_ecef,

position_ecef.add(los_ecef),

1e-10

)

# 8. Intersect the line with Earth

intersection = earth.getIntersectionPoint(

line, # The line of sight

position_ecef, # Satellite position

ecef_frame, # Reference frame

date # Absolute date

)

# Extract geolocation coordinates

if intersection is not None:

latitude = degrees(intersection.getLatitude())

longitude = degrees(intersection.getLongitude())

altitude = intersection.getAltitude()

print(f"Geolocation - Latitude: {latitude:.6f}, Longitude: {longitude:.6f}, Altitude: {altitude:.2f} meters")

else:

print("No intersection found with the Earth surface.")